Understanding ChatGPT API prices

If you’ve ever marveled at the linguistic prowess of OpenAI’s ChatGPT, you’re far from alone. But behind the allure of its capabilities lies a question that any pragmatist looking to integrate it with their application will inevitably face: What’s the price tag on this computational eloquence?

Whether you’re a developer with an idea, a business leader looking to automate customer interactions, or a hobbyist keen on exploring artificial intelligence, this article aims to dissect the often convoluted pricing structure of OpenAI’s chat API.

Breaking into Tokens

The basic unit for calculating the cost of API calls is the token. Each Large Language Model (LLM) breaks text into tokens in its own way: a token can be a single character or an entire word, and a space is usually attached to the beginning of the token that follows it.

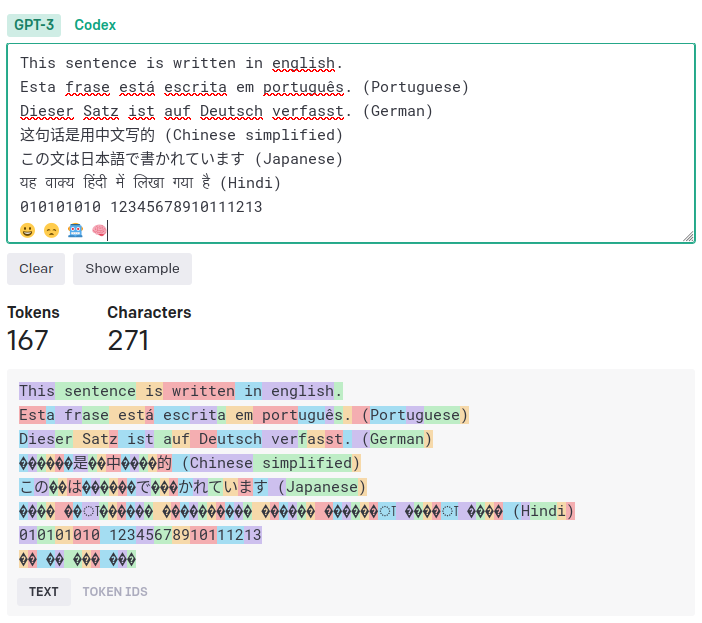

OpenAI offers an online tool called Tokenizer, which counts the tokens in the text you type into a text box and displays them color-coded. At the bottom of the page it explains how to extrapolate an estimate:

A helpful rule of thumb is that one token generally corresponds to ~4 characters of text for common English text. This translates to roughly ¾ of a word (so 100 tokens ~= 75 words).

This rule of thumb is only valid for common English; other languages are tokenized differently. I used Google Translate to get a glimpse of how they may vary:

Tokenizer results for different languages and symbols

English holds a unique position in tokenization as the main language of the training data, so tokens in English text often correspond to whole words, which is consistent with the rule of thumb that one token is approximately 4 characters of common English.

Other Latin-script languages, such as Portuguese and German, are often tokenized into smaller chunks that correspond to syllables or even individual characters, resulting in more tokens per word.

For non-Latin scripts, whether they are logographic (like Chinese Hanzi and Japanese Kanji), phonetic (such as Devanagari for Hindi or the Thai abugida), or alphabetic (the Arabic and Hebrew abjads, for example), the resulting tokens often map to the Unicode bytes that encode those glyphs rather than to whole characters (emojis are parsed the same way).

To count tokens programmatically you can use Tiktoken, OpenAI’s official Python library. OpenAI’s Cookbook has instructions and lists alternatives for other languages like JavaScript, Go, Java and C#.
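As a minimal sketch of both approaches, the snippet below counts tokens with Tiktoken when it is installed and otherwise falls back to the ~4 characters per token rule of thumb. The function names are made up for illustration, and the `cl100k_base` default is an assumption about which encoding your model uses; check the Cookbook for the one that matches your model.

```python
def estimate_tokens(text: str) -> int:
    """Rough estimate from the ~4 characters per token rule (common English only)."""
    if not text:
        return 0
    return max(1, round(len(text) / 4))


def count_tokens(text: str, encoding_name: str = "cl100k_base") -> int:
    """Exact count via tiktoken if it is installed, otherwise the rough estimate.

    The encoding name is an assumption; pick the one that matches your model.
    """
    try:
        import tiktoken  # third-party: pip install tiktoken
    except ImportError:
        return estimate_tokens(text)
    encoding = tiktoken.get_encoding(encoding_name)
    return len(encoding.encode(text))
```

Calling `count_tokens("How many tokens is this sentence?")` returns the exact count when Tiktoken is available. The fallback is only a ballpark figure and will undercount most non-English text.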

API Pricing

API calls are billed for the total number of tokens in the input request and the output response. The text you send is broken down into tokens, which are added to the tokens of the response it prompts. For example, if you send 10 tokens in your query and receive 40 tokens in response, a total of 50 tokens is billed.

OpenAI offers a range of prices for its models. While the heavyweight GPT-4 and custom-trained models offer expansive capabilities at a steeper price, GPT-3.5 Turbo stands out as a more budget-friendly yet efficient choice for dialogue, making it the default choice for chatbot applications.

When users sign up for the web service ChatGPT Plus, OpenAI offers $5 in free API credits, usable within the first three months. Once those credits expire or are spent, you’ll need to add billing information to unlock API usage.

Using a handy online token calculator we can estimate how far those credits go. Take the cheapest GPT-3.5 Turbo model, with a 4K context, at $0.0015 per 1K input tokens and $0.002 per 1K output tokens. If we send 1,000 tokens and get the same amount back, totalling 2,000 tokens (or ~1,500 words in English), each execution costs $0.0035, so we could run 1,428 executions with that $5 credit. If the whole 4K context is filled on every call (2,000 tokens sent and 2,000 received, totalling 4,000 tokens), each execution costs $0.007 and the $5 would be spent within 714 executions.

In this context, “context” refers to the total number of tokens that the model can hold to generate each response. Providing more relevant context generally helps the model respond more accurately, but it costs more, both in tokens billed and in the higher price points of larger-context models.
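The estimate above is simple enough to reproduce in a few lines. This sketch hard-codes the GPT-3.5 Turbo 4K prices quoted in this article (which may have changed since) and uses hypothetical function names. `Decimal` is used so that the tiny per-request amounts are not distorted by floating-point rounding.

```python
from decimal import Decimal

# USD per 1K tokens, as quoted in this article; check current pricing before relying on them.
INPUT_PRICE_PER_1K = Decimal("0.0015")
OUTPUT_PRICE_PER_1K = Decimal("0.002")


def request_cost(input_tokens: int, output_tokens: int) -> Decimal:
    """Cost in USD of one API call, pricing input and output tokens separately."""
    return (
        Decimal(input_tokens) * INPUT_PRICE_PER_1K
        + Decimal(output_tokens) * OUTPUT_PRICE_PER_1K
    ) / 1000


def executions_for_budget(budget: Decimal, input_tokens: int, output_tokens: int) -> int:
    """How many whole calls of this size fit in the budget."""
    return int(budget // request_cost(input_tokens, output_tokens))


print(executions_for_budget(Decimal("5"), 1000, 1000))  # 1,000 tokens each way
print(executions_for_budget(Decimal("5"), 2000, 2000))  # a full 4K context per call
```

The two calls print 1428 and 714, matching the execution counts in the estimate.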

Balancing context and cost

If you’re used to ChatGPT on the web, API responses can feel bare and disconnected, because the API is stateless and lacks the context that the web version carries over from previous prompts. To connect responses you must explicitly pass the context, which can be done by saving the previous response and appending it to the next request. This increases the number of tokens in each request, and thus the cost.
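To make the growth concrete, here is a small sketch that builds up a conversation in the role/content message format used by the chat API, without actually calling it. The helper names and the assistant reply are placeholders, and the token count uses the rough 4-characters-per-token rule rather than a real tokenizer.

```python
def append_turn(history: list[dict], role: str, content: str) -> list[dict]:
    """Return a new history with one more message; the original list is untouched."""
    return history + [{"role": role, "content": content}]


def approx_tokens(history: list[dict]) -> int:
    """Ballpark size of a request that carries the whole history."""
    return sum(len(message["content"]) for message in history) // 4


history = [{"role": "system", "content": "You are a helpful assistant."}]
history = append_turn(history, "user", "What is a token?")
first_request_size = approx_tokens(history)

# ... send `history` to the API here, then store the reply it returns ...
history = append_turn(history, "assistant", "A token is a small chunk of text.")  # placeholder reply
history = append_turn(history, "user", "And how are tokens billed?")
second_request_size = approx_tokens(history)

print(first_request_size, second_request_size)
```

The second request is larger than the first even though the new question is short, because every earlier message is sent, and billed, again.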

Responses can be tweaked with several options, such as n (or best_of on the legacy Completions endpoint) to get multiple responses for the same input, temperature and top_p to control the randomness of responses, presence_penalty and frequency_penalty to reduce repetition, and stop to end the response at a certain sequence.

Keeping a large context across requests, or generating several long replies, may hit the context limit of the chosen model. The cheapest option is the 4K context of GPT-3.5 Turbo, which also has a more costly 16K version, while GPT-4 comes with 8K or 32K.

The costs for those higher-end models and larger context limits ramp up significantly, and the API will return an error if the limit is exceeded. You therefore have to find a balance between paying more for a larger context and spending less by splitting requests and managing context more carefully across prompts.
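One simple way to manage context, sketched below under a few assumptions, is to keep the system message and drop the oldest turns until the history fits a token budget. This is only one possible strategy (summarizing older turns is another), the function names are hypothetical, and token sizes are estimated with the 4-characters-per-token rule instead of a real tokenizer.

```python
def approx_tokens(text: str) -> int:
    """Rough size estimate; swap in a real tokenizer for accurate budgets."""
    return max(1, len(text) // 4)


def trim_history(messages: list[dict], max_tokens: int) -> list[dict]:
    """Keep system messages plus the most recent messages that fit the budget."""
    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]
    budget = max_tokens - sum(approx_tokens(m["content"]) for m in system)

    kept = []
    for message in reversed(rest):  # walk from newest to oldest
        size = approx_tokens(message["content"])
        if size > budget:
            break  # everything older than this is dropped too
        kept.append(message)
        budget -= size
    return system + list(reversed(kept))  # restore chronological order
```

Calling `trim_history(history, 3000)` before each request would keep a 4K model comfortably below its limit while leaving room for the reply.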

For an ultra-simplified example, imagine using the API to review the contents of a book. The 16K context of the GPT-3.5 Turbo model would be enough to send 8K tokens (~6K words in English) and receive the same amount back; 6K words is roughly 13 pages of single-spaced text in 12 pt Arial. Each request sends 8K tokens at $0.003 per 1K tokens and receives 8K tokens at $0.004 per 1K tokens, totalling $0.056 per request. For a 1,300-page book, that would be 100 requests and $5.60 in total.

You can try out the API in the Playground to get a feel for how it works, but be mindful that it is paid and will incur API costs. The API reference has more details on the accepted options and parameters.
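The same book estimate as a short calculation, so the page count can be varied. The constants are the ones used in this example (13 pages and 8K tokens each way per request, 16K-context prices as quoted here) and the function name is hypothetical.

```python
from decimal import Decimal

PAGES_PER_REQUEST = 13          # ~6K English words, i.e. ~8K tokens
TOKENS_IN = 8000
TOKENS_OUT = 8000
INPUT_PRICE_PER_1K = Decimal("0.003")   # USD, as quoted in this article
OUTPUT_PRICE_PER_1K = Decimal("0.004")  # USD, as quoted in this article


def book_review_cost(pages: int) -> tuple[int, Decimal]:
    """Return (number of requests, total cost in USD) for a book of this length."""
    requests = -(-pages // PAGES_PER_REQUEST)  # ceiling division without floats
    per_request = (TOKENS_IN * INPUT_PRICE_PER_1K + TOKENS_OUT * OUTPUT_PRICE_PER_1K) / 1000
    return requests, requests * per_request


requests, total = book_review_cost(1300)
print(requests, total)
```

A book whose length is not a multiple of 13 pages rounds up to one extra request, which this sketch prices as a full one.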

Limit spending

After choosing the smallest model and context that fit your usage, the two factors that ramp up costs are the total number of tokens and the number of API calls in your application. You can specify the maximum number of tokens to be returned on each request, which limits the response size and cost.

This becomes relevant when integrating with a high volume of data, such as the contents of books, articles, social media posts or e-commerce products, or with heavy interaction from a large user base.

OpenAI has a Production best practices guide that recommends a few strategies for limiting spending and managing costs. It states the billing limits:
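In the chat API, the cap on returned tokens is the `max_tokens` field of the request body. The sketch below only builds that body and computes the most the reply could cost; it does not send anything. The helper names are hypothetical, and the output price is the GPT-3.5 Turbo 4K figure quoted earlier in this article.

```python
from decimal import Decimal

OUTPUT_PRICE_PER_1K = Decimal("0.002")  # USD, as quoted in this article


def build_request(messages: list[dict], max_output_tokens: int) -> dict:
    """Build a chat request body with a cap on the reply length."""
    return {
        "model": "gpt-3.5-turbo",
        "messages": messages,
        "max_tokens": max_output_tokens,  # upper bound on tokens generated for the reply
    }


def max_output_cost(max_output_tokens: int) -> Decimal:
    """Upper bound, in USD, on what the reply part of one request can cost."""
    return Decimal(max_output_tokens) * OUTPUT_PRICE_PER_1K / 1000


body = build_request([{"role": "user", "content": "Summarize this product review."}], 256)
print(body["max_tokens"], max_output_cost(256))
```

Multiplying that upper bound by the expected number of calls gives a worst-case figure for the output side of the bill. Input tokens still have to be budgeted separately.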

Once you’ve entered your billing information, you will have an approved usage limit of $120 per month, which is set by OpenAI. To increase your quota beyond the $120 monthly billing limit, please submit a quota increase request.

You can set limits in the Usage limits panel, and there’s also a dashboard to track usage. The API itself also has rate limits on the number of requests per minute and tokens per minute (and per day on some models) that you’re allowed to make.
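When a rate limit is hit, a common way to cope is to retry with exponential backoff. The sketch below is a generic version of that technique, not taken from OpenAI’s guide: `RateLimited` is a hypothetical stand-in for whatever error your client library raises, and the delays are arbitrary defaults.

```python
import random
import time


class RateLimited(Exception):
    """Hypothetical stand-in for the rate-limit error raised by your API client."""


def call_with_backoff(func, max_attempts=5, base_delay=1.0, sleep=time.sleep):
    """Call func(); on RateLimited, wait exponentially longer and try again."""
    for attempt in range(max_attempts):
        try:
            return func()
        except RateLimited:
            if attempt == max_attempts - 1:
                raise  # out of attempts, surface the error to the caller
            # base, 2x base, 4x base, ... plus jitter so clients don't retry in lockstep
            delay = base_delay * (2 ** attempt) + random.uniform(0, base_delay)
            sleep(delay)
```

The `sleep` parameter exists so the waiting can be replaced in tests. In an application you would wrap the function that performs the API request and translate the client’s own rate-limit error into this flow.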

Moderating user interaction

Allowing users of your application to interact with the API requires some restraint to control costs and avoid abuse. OpenAI has a list of safety best practices, and the free Moderation API can be used to filter out offensive content.

You might want to add your own validation for length and other criteria to make it more cost-efficient. And if there’s heavy user interaction, you probably want to define a cooldown period between requests on endpoints that call the API, so users can’t spam it.
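Both ideas fit in a few lines. This is a minimal in-memory sketch with made-up names and limits (2,000 characters, 10 seconds); a real deployment with several server processes would need shared storage for the timestamps.

```python
import time

MAX_INPUT_CHARS = 2000    # arbitrary example limit
COOLDOWN_SECONDS = 10.0   # arbitrary example cooldown


def is_valid_prompt(text: str, max_chars: int = MAX_INPUT_CHARS) -> bool:
    """Reject empty prompts and prompts long enough to be costly."""
    return 0 < len(text.strip()) <= max_chars


class CooldownGate:
    """Allow each user one request per cooldown period."""

    def __init__(self, cooldown_seconds: float = COOLDOWN_SECONDS, clock=time.monotonic):
        self._cooldown = cooldown_seconds
        self._clock = clock
        self._last_allowed = {}  # user id -> time of the last allowed request

    def allow(self, user_id: str) -> bool:
        now = self._clock()
        last = self._last_allowed.get(user_id)
        if last is not None and now - last < self._cooldown:
            return False  # still cooling down, do not call the API
        self._last_allowed[user_id] = now
        return True
```

An endpoint would check `is_valid_prompt(text)` and `gate.allow(user_id)` first, and only call the API when both return `True`.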

Up next

The character/token ratio for different languages and the ways that LLMs are trained to handle them deserve a deeper dive in a follow-up article, so stay tuned!